Open Source’s Massive Unfair Advantage in the AI Era

Software ate the world.

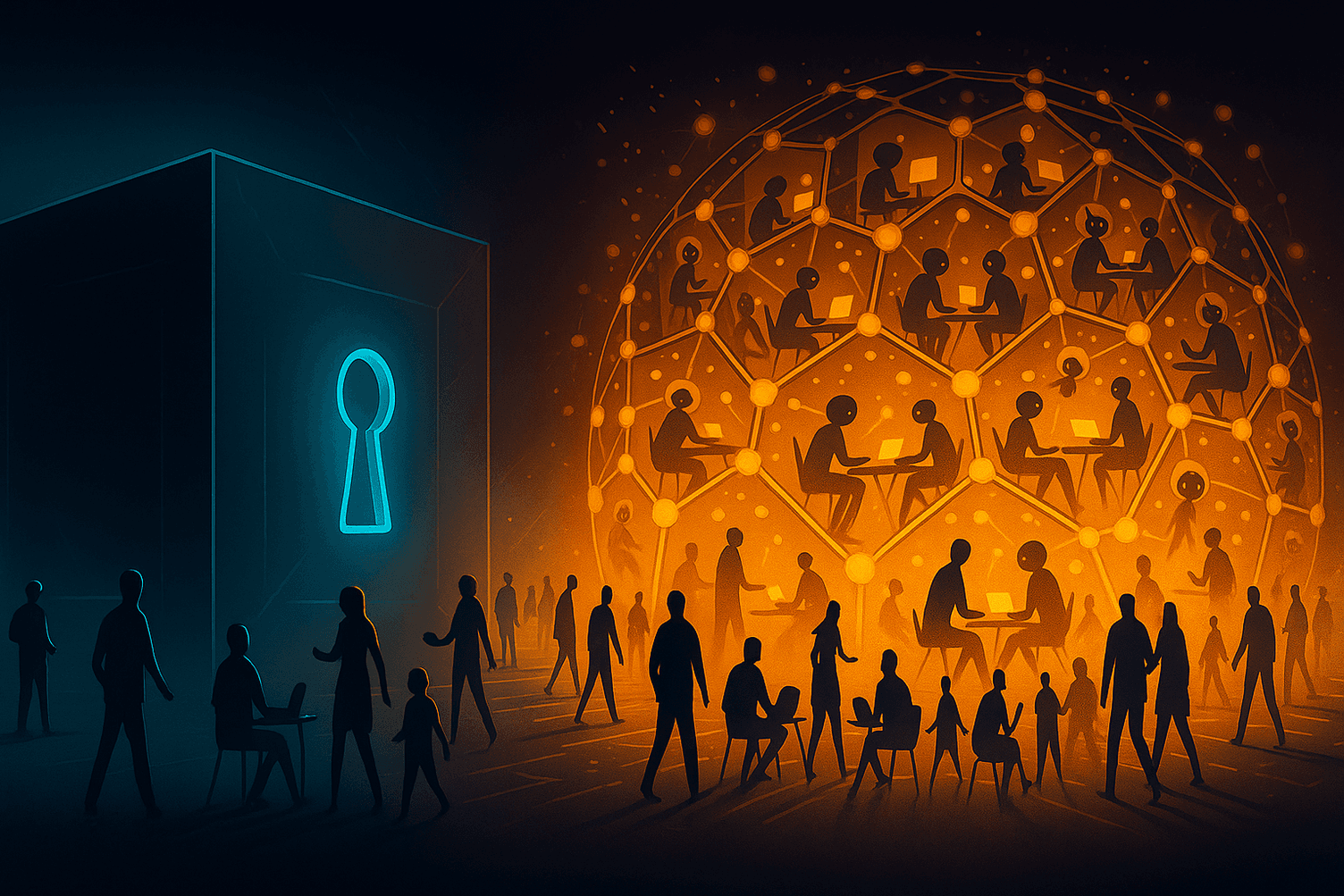

Now, open source is eating software, and AI is the ultimate catalyst. The relevance of proprietary software is thinning out, fast. As progress accelerates, proprietary software is becoming little more than a thin layer of business logic glued on top of open source libraries, running on open source infrastructure. Strip the open source out of most modern apps, and there’s not much left. And that trend is only accelerating.

We’ve been watching this play out for years. Infrastructure, databases, orchestration, ML, BI — open source has been quietly eating its way up and down the stack. AI just lit the afterburners.

OSS isn’t just better. It’s inevitable.

The core advantages of open source haven’t changed:

- Global collaboration through unbounded accessibility

- Transparent design debates

- Thousands of edge cases handled in public

- A growing universe of contributors who don’t need NDAs or contracts

AI didn’t create this shift. But it’s been voraciously consuming everything in the open while training. Every open source commit, PR, issue, and debate has been fuel. The models have absorbed the entire open source universe into their weights, building up deep context and understanding. So far, this has quietly supercharged open source acceleration behind the scenes while contributors engage with AI. But that was just phase one. An army of AI agents is rising, ready to actively engage, refactor, extend, and collaborate directly on top of open source itself.

Foundation models know open source inside and out

I’m not sure whether people realize just how much foundation models know about open source projects. The biggest foundation models already know about all of our code. They’ve read every line of code, public PR, issue thread, changelog, commit, SIP, mailing list post, blog, and doc tied to open source projects like Apache Superset.

It’s not just "awareness" of Superset. The models know that one edge case someone fixed in 2020. They know the obscure migration bug from version 0.37. They’ve seen every architecture tradeoff debated in public, every SIP (Superset Improvement Proposal), every backwards-compatibility hack argued over in GitHub comments at 2AM.

They know the modules nobody has touched in years. The brittle parts. The weird corners. The things only the most tenured contributors remember.

And all of it lives directly inside the neural net. Not as documents pulled from a vector database in the hope that the right context comes back and fits within the context window. It's baked into the weights.

Go ask your favorite model what it knows about Superset. Ask about a specific SIP, about a specific module, about architecture decisions and how the community got to a consensus. It knows, without calling an MCP service.

Then ask it what it knows about the Tableau codebase.

Exactly.

Proprietary vendors are flying blind

If you’re sitting on a decade of closed-source code, you’re invisible to the models. Your design choices, mistakes, debates: none of it is part of the world’s collective AI brain.

Sure, you can try to patch things up with RAG. You can fine-tune (good luck). Or you can roll the dice with open weights and custom training (I’m sorry if you think that’s viable or that it can compete against the latest models out there). You’re not catching up to foundation models that constantly train directly on the open source universe. You're just not.

We’re riding the wave with the latest model while proprietary software tries to fetch context and hack it into the context window.

Agents are naturally drawn to open source

Agents don’t need product roadmaps or sales pitches. They want code they can crawl, reason over, simulate against, refactor, optimize, and extend.

Open source projects become training scaffolds. Every PR makes the model better. Every design debate adds to its world model. Every bug sharpens its understanding. It’s baked deep into the models.

The models prefer open source because open source is the dataset.

Training destroys prompting

The information baked into the model’s weights does not compare to the context that’s provided in the prompt’s window — it’s a fundamentally different kind of knowledge. It’s pattern recognition. Abstraction. Intuition.

During training, when a model sees an obscure bug fix merged into Superset in 2020, or a heated design debate on a SIP thread, it doesn’t just memorize the text. It absorbs the pattern. The tradeoffs. The edge cases. It learns. The weights compress years of debates, mistakes, fixes, and architecture choices into something it can reason over instantly.

The context window? It might be more fundamentally limited than you think. You can shove documents and retrieval hits into the prompt, but the model still has to process them token by token, left to right, every time. Transformers don’t reason holistically over the full context; they operate through local attention patterns and statistical prediction. There’s no true abstraction or compression happening in real time, just probabilistic token generation based on what’s immediately visible. It’s shallow pattern matching over a sliding window of text.

As Andrej Karpathy summarized: “Pretraining gives you knowledge. Prompting gives you short-term memory.”

And sure, you can try to patch it with RAG. You vectorize everything, hope for a semantic match, pick some chunks that fit in the window, and pray you retrieved what matters. Yes, context windows are getting bigger: million-token contexts, maybe infinite contexts are coming, but that doesn’t fix the real problem. Overloading the context window is like trying to cram your short-term memory with every possible detail right before an exam. It creates overload, distraction, and emergent confusion inside the model’s reasoning. Transformers don’t magically "understand" better with more tokens. They still predict token by token. Bigger windows just shift the tradeoffs. That problem isn’t solved and may never fully be.
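To make that retrieval gamble concrete, here’s a minimal sketch in Python. It’s a toy under loud assumptions: `embed`, `cosine`, and `retrieve` are hypothetical names invented for illustration, the bag-of-words vector stands in for a real embedding model, and a whitespace word count stands in for a real tokenizer. The sample chunks are made up too.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, chunks, token_budget):
    """Rank chunks by similarity to the query, then greedily keep the ones that fit."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        cost = len(chunk.split())  # crude token estimate: whitespace-separated words
        if used + cost <= token_budget:
            selected.append(chunk)
            used += cost
    return selected


# Made-up chunks standing in for an indexed knowledge base.
chunks = [
    "The dashboard filter migration fixed a null handling bug",
    "Release notes for the charting plugin and its color palette options",
    "Design debate about backwards compatibility of the filter API",
]

# With a tight budget, only the top-ranked chunk makes it into the prompt.
print(retrieve("filter migration bug", chunks, token_budget=10))
```

Notice what gets left behind: the design debate chunk is relevant to the question, but it ranks second and doesn’t fit in the budget, so the model never sees it. Real pipelines use better embeddings and smarter packing, but the shape of the tradeoff is the same.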

Pretraining gives you muscle memory. Prompting hands you the manual.

That’s why foundation models trained on open source aren’t just ‘more aware’ — they’re fundamentally more capable.

The compound advantage is compounding harder

Open source was already winning on cost, flexibility, velocity, and ecosystem. Now you get:

- AI agents that can directly operate on OSS code.

- Infinite, parallelized refactoring, optimization, and test generation.

- OSS governance as the primary bottleneck, not engineering capacity, and agents already help scale governance too.

Software improvement is shifting from writing code to orchestrating agents. The agents trained on open source will move faster, be more accurate and need less orchestration. The ones hacking context windows to compensate for missing training data will always lag.

But it doesn’t stop at code. Agents are increasingly participating as first-class community members: collaborating with users, PMs, designers, domain experts, and even other specialized agents. Open source creates the open hive where this multi-agent collaboration can thrive. Proprietary vendors, locked behind walls, are simply cutting themselves off from this entire emergent dynamic. Good luck pulling Llama’s weights and post-training that animal to learn new tricks. Let’s hope you have access to serious GPU resources, money, and the required know-how in-house. We’ll be here riding the latest billion-dollar model and the agent wave while you burn through capital and get dubious results.

At Preset, we’re seeing this play out. Engineering capacity isn’t the limiter anymore. The limiter is figuring out how to channel LLMs and agents and let them run within the right set of boundaries. It’s a different game.

The bottom line

Proprietary software is thinning fast, straight into nothingness.

It simply isn’t a fair fight.

Open source software is sitting on a foundation deeply embedded into the world’s best models. Our code and public knowledge base are their training set. That’s a compounding advantage you can’t buy. You can only participate.

The next wave of software dominance won’t come from who writes the best code. It’ll come from who builds on top of the most AI-native substrate.

The not-so-sad reality: proprietary software is fading fast, and no one’s shedding a tear.

Join the revolution.

If you're re-evaluating your stack, don't just renew that vendor contract on autopilot. Try Superset. Try every open source tool you can get your hands on. The future is open, and it's moving fast.

Related Reading

- Dosu + Apache Superset — AI-powered issue management in action

- Kapa.ai + Apache Superset — AI transforming community support

- AI in BI: the Path to Full Self-Driving Analytics — How AI is changing analytics